In this article we’re going to have a look at some of the eventing patterns we have in Nimbus.

In Command handling with Nimbus we saw how we deal with fire-and-forget commands. This time around we care about events. They’re still fire-and-forget, but the difference is that whereas commands are consumed by only one consumer, events are consumed by multiple consumers. They’re broadcast. Mostly.

To reuse our scenario from our previous example, let’s imagine that we have a subscription-based web site that sends inspirational text messages to people’s phones each morning.

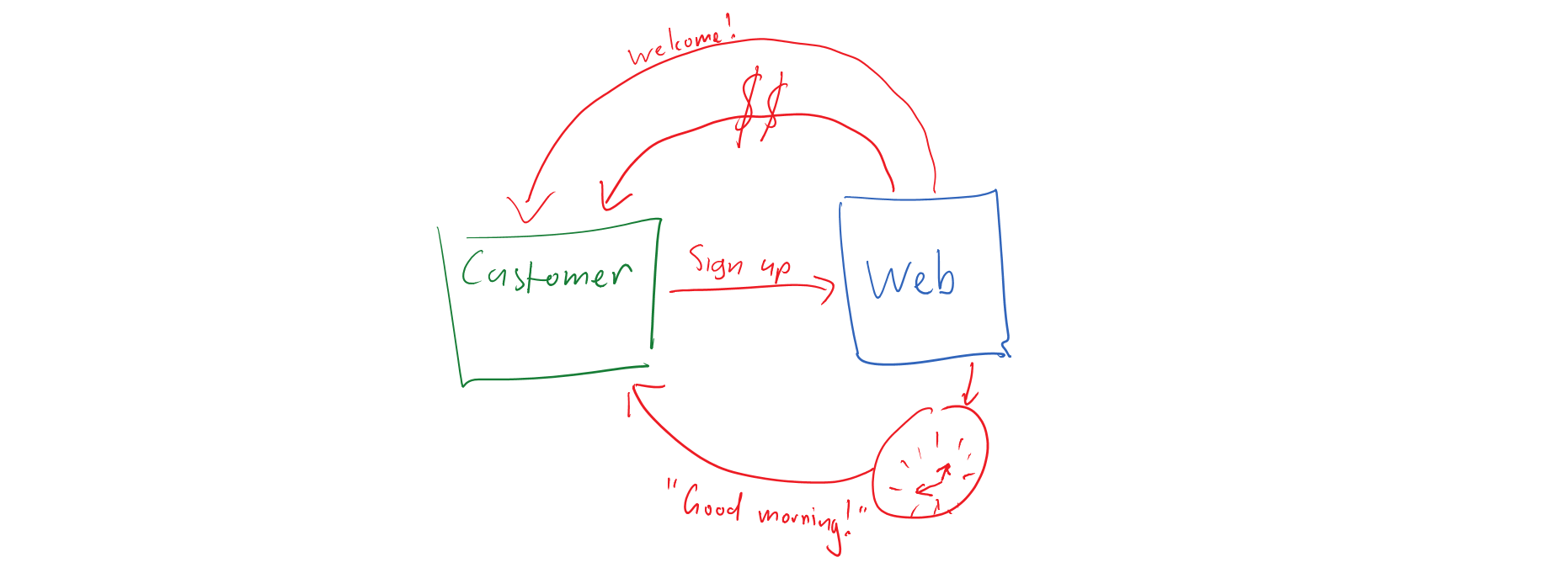

Scenario #1: Monolithic web application (aka Another Big Ball of Mud™).

We have a web application that handles everything from sign-up (ignoring for now where and how our data are stored) through to billing and the actual sending of text messages. That’s not so great in general, but let’s have a look at a few simple rules:

- When a customer signs up they should be sent a welcome text message.

- When a customer signs up we should bill them for their first month’s subscription immediately.

- Every morning at 0700 local time each customer should be sent an inspirational text.

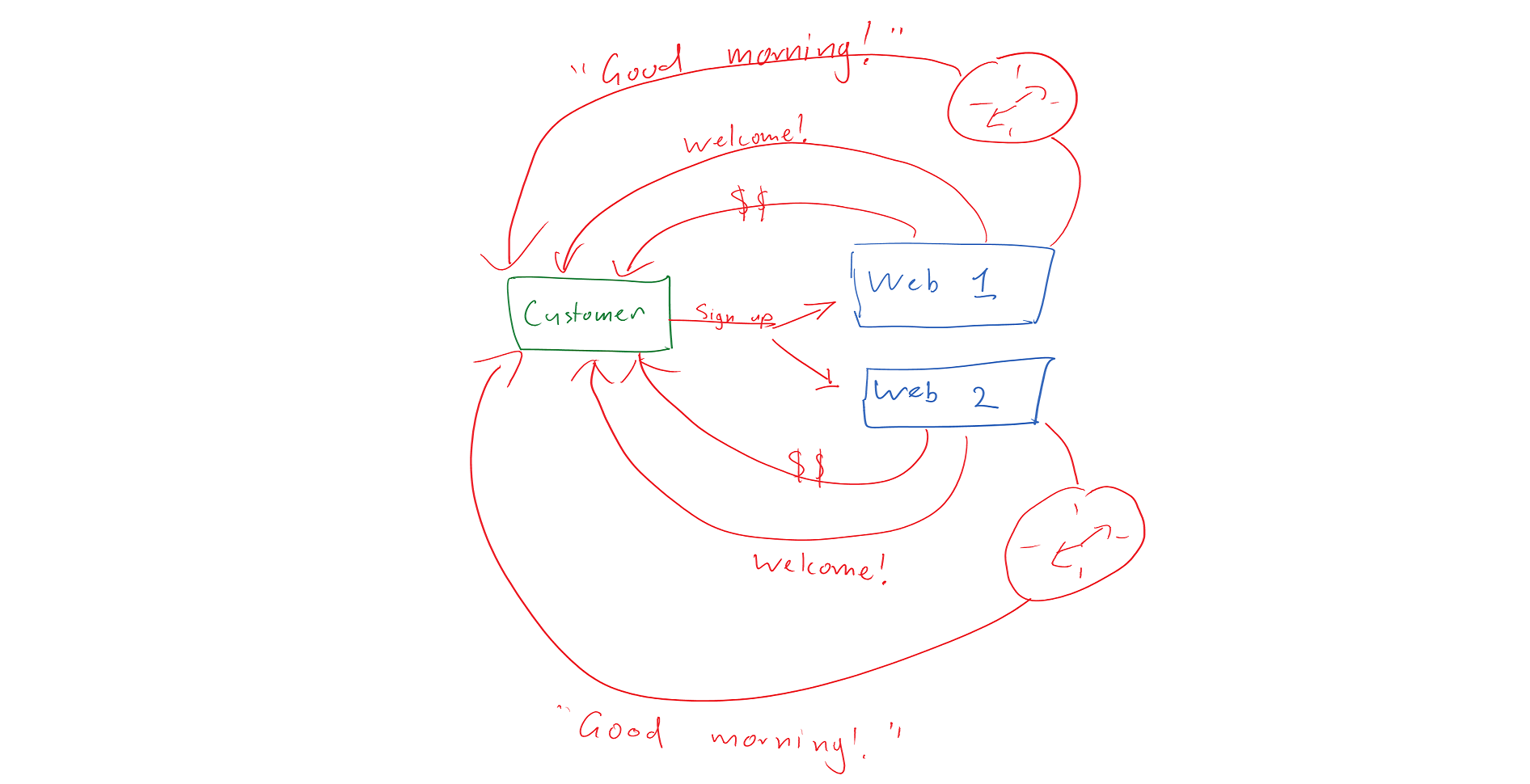

Business is great. (It really is amazing what people will pay for, isn’t it?) Actually… business is so great that we need to start scaling ourselves out. As we said before, let’s ignore the bit about where we store our data and assume that there’s just a repository somewhere that isn’t anywhere near struggling yet. Unfortunately, our web-server-that-does-all-the-things is starting to chug quite a bit and we’re getting a bit worried that we won’t see out the month before it falls over.

But hey, it’s only a web server, right? And we know about web farms, don’t we? Web servers are easy!

We provision another one…

… and things start to go just a little bit wrong.

Our sign-up still works fine - the customer will hit either one web server or the other - and our welcome message and initial invoice get generated perfectly happily, too. Unfortunately, every morning, our customer is now going to receive two messages: one from each web server. This is irritating for them and potentially quite expensive for us - we’ve just doubled our SMS delivery costs. If we were to add a third (or tenth) web server then we’d end up sending our customer three (or ten) texts per morning. This is going to get old really quickly.

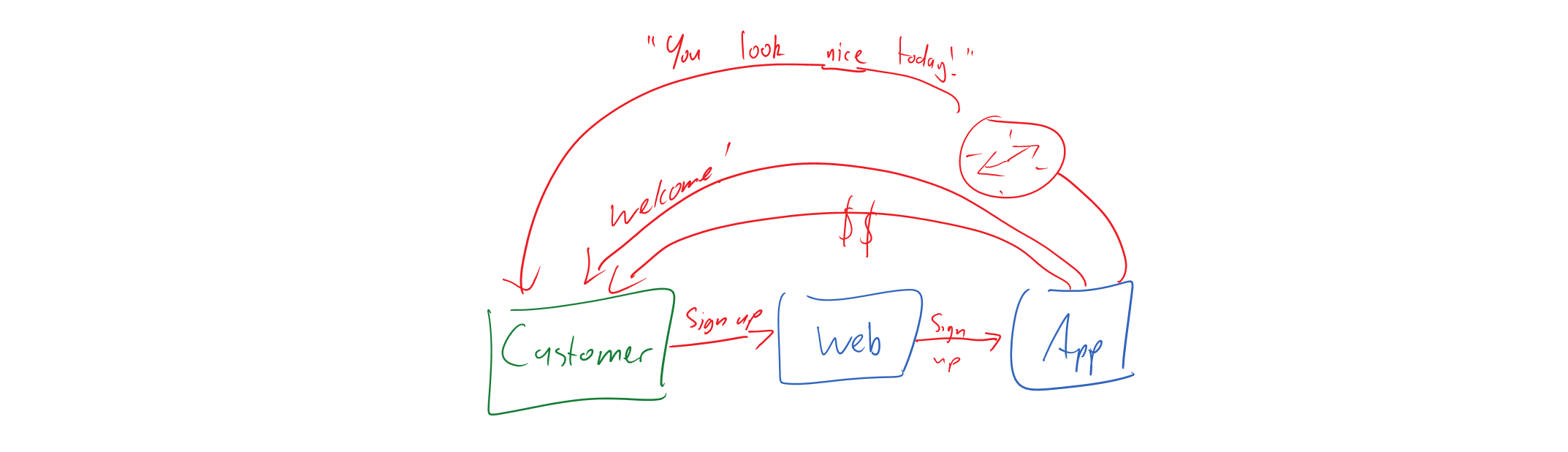

Scenario #2: Distributed architecture: a first cut

The obvious mistake here is that our web servers are responsible for way more than they should be. Web servers should… well… serve web pages. Let’s re-work our architecture to something sensible.

We’re getting there. This doesn’t look half-bad except that we’ve now simply moved our problem of scaling one layer down. We can have as many web servers as we want, now, but as soon as we start scaling out our app servers we run into the same problem as in Scenario #1.

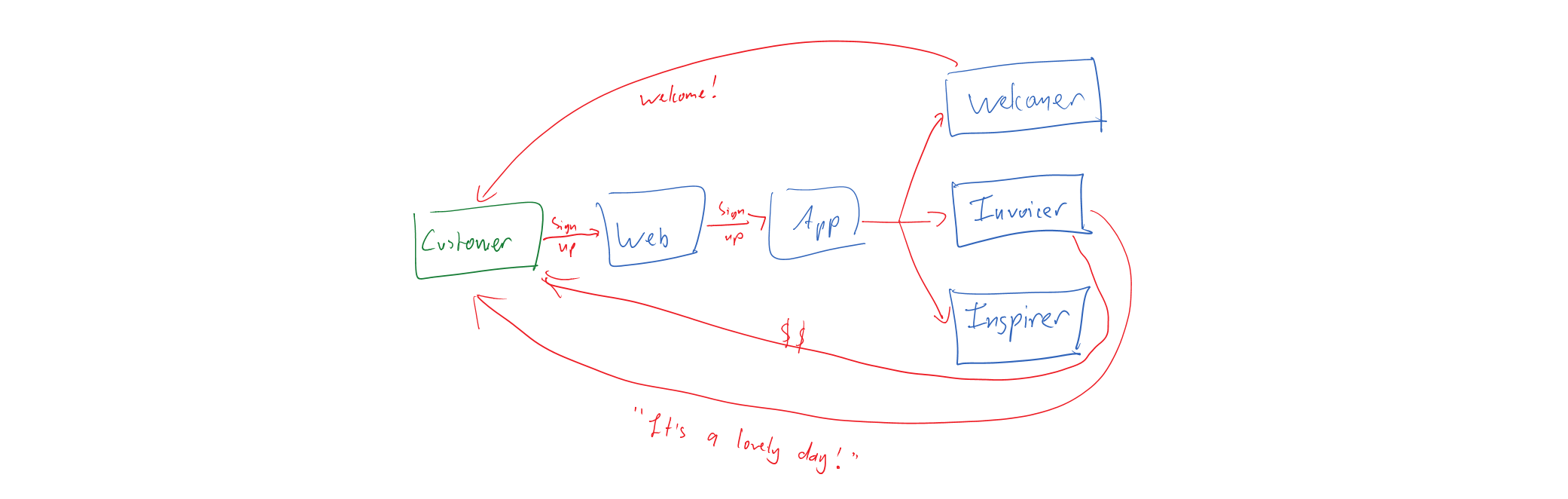

Scenario #3: Distributed event handlers

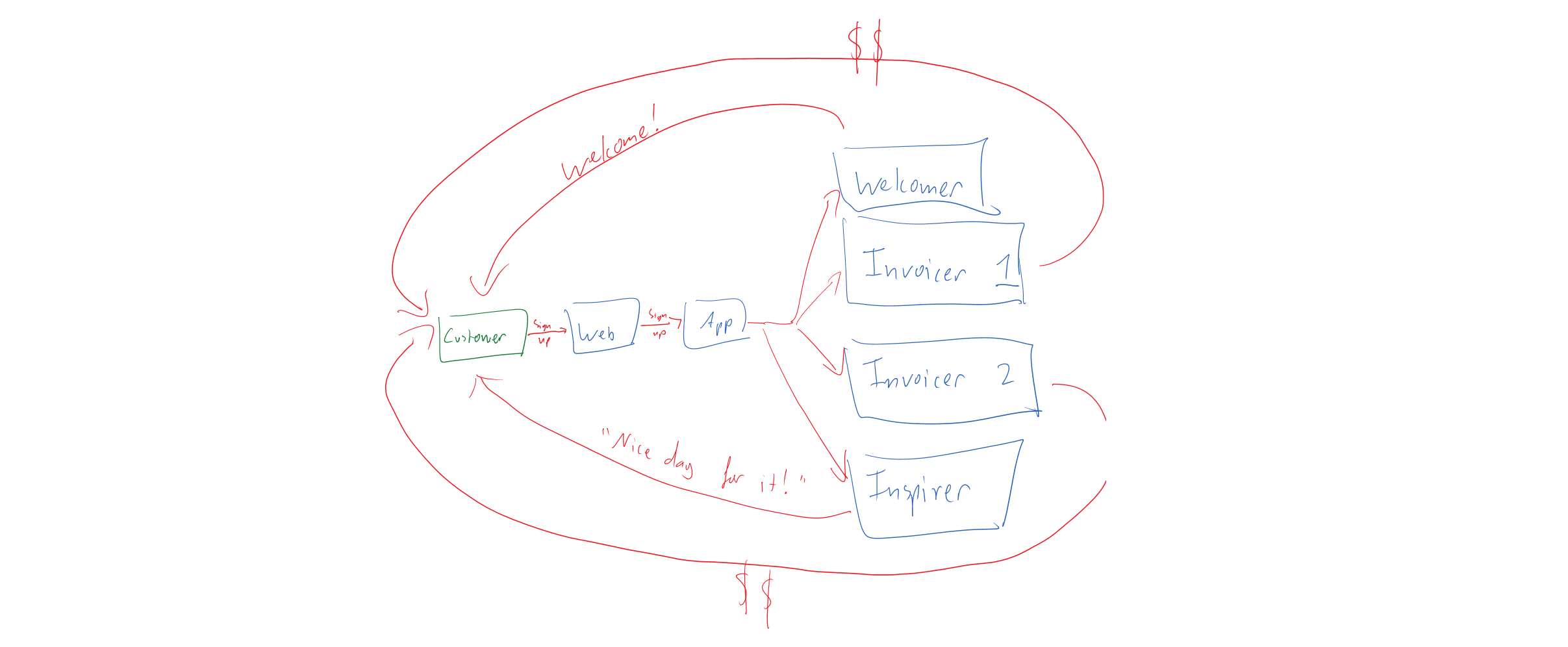

Our next step is to separate some responsibilities onto different servers. Let’s have a look at what that might look like:

This looks pretty good. We’ve split the load away from our app server onto a couple of different servers that have their own responsibilities.

This is the first example that’s actually worth writing some sample code for. Our code in this scenario could look something like this in our sign-up logic:

    public async Task SignUp(CustomerDetails newCustomer)
    {
        // do sign-up stuff
        await _bus.Publish(new CustomerSignedUpEvent(newCustomer));
    }

and with these two handlers for the CustomerSignedUpEvent:

    namespace WhenACustomerSignsUp
    {
        public class SendThemAWelcomeEmail: IHandleMulticastEvent<CustomerSignedUpEvent>
        {
            public async Task Handle(CustomerSignedUpEvent busEvent)
            {
                // send the customer an email
            }
        }

        public class GenerateAnInvoiceForThem: IHandleMulticastEvent<CustomerSignedUpEvent>
        {
            public async Task Handle(CustomerSignedUpEvent busEvent)
            {
                // generate an invoice for the customer
            }
        }
    }
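The snippets above pass a `CustomerSignedUpEvent` around without showing it. Here is a minimal sketch of what such an event contract might look like. Everything in it is an assumption made for illustration: the `CustomerDetails` fields are hypothetical, and the `IBusEvent` interface is declared locally as a stand-in so the sample compiles on its own (in a real project the event would implement the marker interface from Nimbus' message contracts instead).

```csharp
using System;

// Stand-in marker interface so this sample compiles without Nimbus.
// Assumption: a real event would implement Nimbus' own marker interface.
public interface IBusEvent { }

// Hypothetical shape; the article never shows CustomerDetails.
public class CustomerDetails
{
    public Guid CustomerId { get; set; }
    public string MobileNumber { get; set; } = "";
}

// The event carries only the data that handlers need in order to react.
public class CustomerSignedUpEvent : IBusEvent
{
    // Parameterless constructor so a serializer can rebuild the event.
    protected CustomerSignedUpEvent() { }

    public CustomerSignedUpEvent(CustomerDetails customer)
    {
        if (customer == null) throw new ArgumentNullException(nameof(customer));
        CustomerId = customer.CustomerId;
        MobileNumber = customer.MobileNumber;
    }

    public Guid CustomerId { get; set; }
    public string MobileNumber { get; set; } = "";
}
```

Copying the fields onto the event, rather than holding a reference to `CustomerDetails`, keeps the message a flat, self-describing record of what happened. That tends to make life easier for handlers living in other services.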

We’re actually in pretty good shape here. But business is, by now, booming, and we’re generating more invoices than our single invoicer can handle. So we scale it out…

… and wow, but do the phones start ringing. Can you spot what we’ve done? Yep, that’s right - every instance of our invoicer is happily sending our customers an invoice. When we had one invoicer, each customer received one invoice and all was well. When we moved to two invoicers, our customers each received two invoices for the same service. If we were to scale to ten (or a thousand) invoicers then our customers would receive ten (or a thousand) invoices.

Our customers are not happy.

Scenario #4: Competing handlers

Here’s where we introduce Nimbus’ concept of a competing event handler. In this example:

    public class GenerateAnInvoiceForThem: IHandleMulticastEvent<CustomerSignedUpEvent>
    {
        public async Task Handle(CustomerSignedUpEvent busEvent)
        {
            // generate an invoice for the customer
        }
    }

we implement the IHandleMulticastEvent<> interface. This means that every instance of our handler will receive a copy of the message. That’s great for updating read models, caches and so on, but not so great for taking further action based on events.

Thankfully, there’s a simple solution. In this case we want to use a competing event handler, like so:

    public class GenerateAnInvoiceForThem: IHandleCompetingEvent<CustomerSignedUpEvent>
    {
        public async Task Handle(CustomerSignedUpEvent busEvent)
        {
            // generate an invoice for the customer
        }
    }

By telling Nimbus that we only want a single instance of each type of service to receive this event, we can ensure that our customers will only receive one invoice no matter how much we scale out.

A key concept to grasp here is that a single instance of each service type will receive the message. In other words:

- Exactly one instance of our invoicer will see the event

- Exactly one instance of our welcomer will see the event
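To make the two delivery rules concrete, here is a toy in-memory model of them. It is only a sketch of the behaviour described above, not how Nimbus is implemented: `ToyBus`, `HandlerInstance` and `Delivery` are hypothetical names invented for this example, and "pick the first instance" stands in for whichever instance happens to take the message off the queue first.

```csharp
using System.Collections.Generic;
using System.Linq;

public enum Delivery { Multicast, Competing }

// One running copy of a service, e.g. instance 2 of the "invoicer".
public sealed class HandlerInstance
{
    public HandlerInstance(string serviceName, int instanceId, Delivery mode)
    {
        ServiceName = serviceName;
        InstanceId = instanceId;
        Mode = mode;
    }

    public string ServiceName { get; }
    public int InstanceId { get; }
    public Delivery Mode { get; }
}

public static class ToyBus
{
    // Returns the instances that would see one published event.
    public static List<HandlerInstance> Deliver(IEnumerable<HandlerInstance> subscribers)
    {
        var all = subscribers.ToList();

        // Multicast: every instance receives its own copy.
        var multicast = all.Where(s => s.Mode == Delivery.Multicast);

        // Competing: instances of the same service share a single copy,
        // so exactly one instance per service name handles it.
        var competing = all
            .Where(s => s.Mode == Delivery.Competing)
            .GroupBy(s => s.ServiceName)
            .Select(g => g.First());

        return multicast.Concat(competing).ToList();
    }

    // Builds `count` instances of one service, all using the same mode.
    public static IEnumerable<HandlerInstance> Instances(string serviceName, int count, Delivery mode)
    {
        return Enumerable.Range(1, count)
            .Select(i => new HandlerInstance(serviceName, i, mode));
    }
}
```

With three invoicer instances and two welcomer instances all registered as `Delivery.Competing`, `ToyBus.Deliver` returns two instances, one per service. Register the same five as `Delivery.Multicast` and it returns all five, which is exactly the duplicate-invoice problem from Scenario #3.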

Combining multicast and competing event handlers

It’s entirely possible that our invoicer will want to keep an up-to-date list of customers for all sorts of reasons. In this case, it’s likely that our invoicer will want to receive a copy of the CustomerSignedUpEvent even if it’s not the instance that’s going to generate an invoice this time around.

Our invoicer code might now look something like this:

    namespace WhenACustomerSignsUp
    {
        public class GenerateAnInvoiceForThem: IHandleCompetingEvent<CustomerSignedUpEvent>
        {
            public async Task Handle(CustomerSignedUpEvent busEvent)
            {
                // only ONE instance of me will have this handler called
            }
        }

        public class RecordTheCustomerInMyLocalDatabase: IHandleMulticastEvent<CustomerSignedUpEvent>
        {
            public async Task Handle(CustomerSignedUpEvent busEvent)
            {
                // EVERY instance of me will have this handler called.
            }
        }
    }

So there we go. We now have a loosely-coupled system that we can scale out massively on demand, without worrying about concurrency issues.

This is awesome! But how do we send our inspirational messages every morning?

Sneak peek: have a look at the SendAt(…) method on IBus. We’ll cover that in another article shortly.